Doubao releases two video generative models! Multiple vertical models are newly launched, and the research and development of basic models is in full swing!

Doubao releases two video generative models! Multiple vertical models are newly launched, and the research and development of basic models is in full swing!

日期

2024-09-24

分类

技术发布

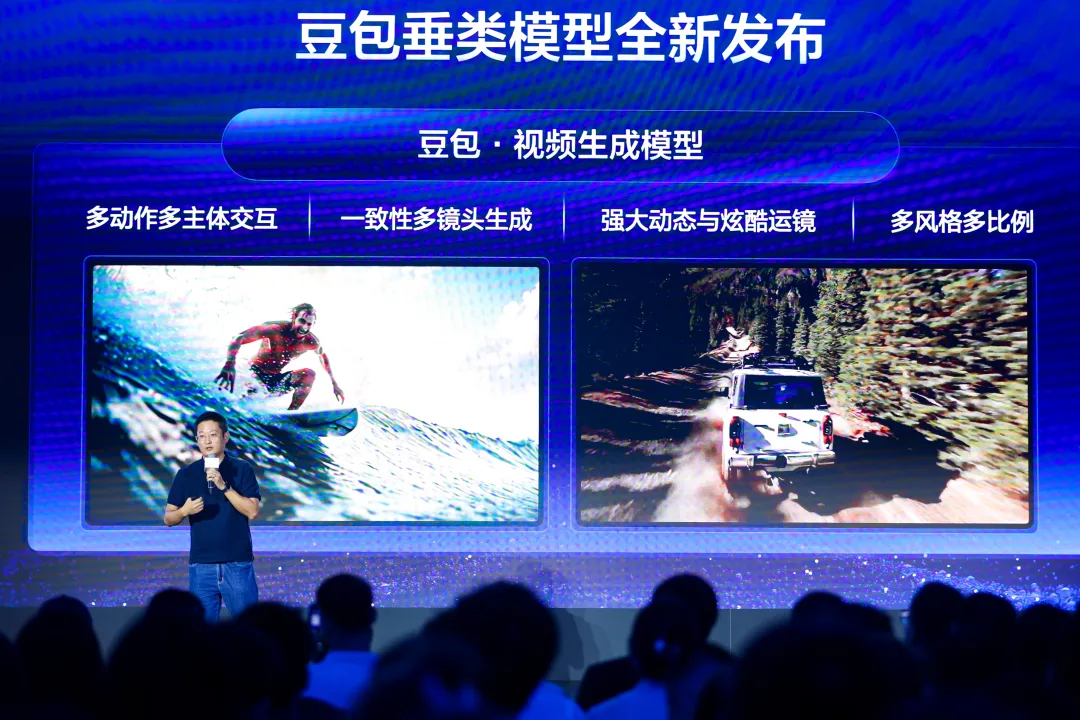

The Doubao Video Generative model was released on September 24th at the Shenzhen Station of the Volcano Engine AI Innovation Tour. The model adopts efficient DiT fusion computing units, allowing for more thorough compression of encoded video and text. The newly designed diffusion model training method brings consistent multi-lens generation capability, and the deeply optimized Transformer structure significantly boosts the diversification of video generation.

The Doubao Music Model and Doubao Simultaneous Interpretation Model were also released at the event, along with an upgrade to the Doubao LLM Family. This article introduces the highlights of this release.

On September 24th, the 2024 Volcano Engine AI Innovation Tour Shenzhen Station showcased the latest progress of the Doubao large language model.

The event featured the release of the Doubao Video Generative Model, the Doubao Music Model, and the Doubao Simultaneous Interpretation Model.

In addition, the Doubao General Model Pro and vertical models, such as the text-to-image model and the speech synthesis model, have also been upgraded.

1. The release of the two video generative models offers a cinematic visual experience.

This event introduces two large language models (LLM), Doubao Video Generation-PixelDance and Doubao Video Generation-Seaweed, which are now available for testing in the enterprise market.

The efficient DiT fusion computation unit, which can compress encoded video and text more thoroughly, coupled with the newly designed diffusion model training method, enhances the capability to maintain consistency in multi-camera switching. On top of that, the team has also optimized the diffusion model training framework and the Transformer structure, significantly improving the diversification of video generation.

These technical advantages can be seen in the following aspects:

- Accurate semantic understanding and interactions with multi-subjects and actions.

The Doubao video generative model can follow complex user prompts and accurately understand semantic relationships; it can also follow sequential multi-lens action instructions and support interactions among multiple subjects.

Prompt: A Chinese man takes a sip of coffee, and a woman walks up behind him.

Prompt: A foreign man and woman with long hair galloping on horses

- Powerful dynamic presentations and amazing camera movements take us beyond PPT animations.

Camera movement is one of the keys to video language. The Doubao video generative model can spectacularly switch between dynamic subjects and various camera angles. It features capabilities like zooming, surrounding, panning, scaling, and target following, flexibly controlling the viewing angle to deliver a real-world experience.

Prompt: An Asian man is swimming with goggles on, and behind him is another man in a wetsuit.

Prompt: A woman takes a sip of coffee, and then walks out carrying the coffee and holding an umbrella.

- Consistent consistent multi-lens generation, telling a complete story in 10 seconds.

Consistent multi-lens creation is a special feature of the Doubao video generation model. Within a single prompt, you can switch between multiple lenses while maintaining consistency of subject, style, and atmosphere.

Prompt: A girl gets out of a car, with the setting sun in the distance.

Prompt: A foreign man surfing and giving a thumbs-up to the camera

- High Fidelity, highly aesthetic, supporting multiple styles and ratios

The Doubao video generative model supports a wide variety of themes and styles, including black-and-white, 3D animation, 2D animation, traditional Chinese painting, watercolor, and gouache. At the same time, the model covers six ratios: 1:1, 3:4, 4:3, 16:9, 9:16, and 21:9, fully adapting to scenarios such as movies, TV, computers, and mobile phones.

Prompt: dreamlike scenario, a white sheep with curly horns

Prompt: Bird in ink painting style, 16:9 ratio

2. Brand-new music model and simultaneous interpretation model to meet diverse needs

Along with video generative models, Doubao also released its new music model and simultaneous interpretation models.

- Doubao Music Model

Doubao's music model adopts a unique technical solution to build a unified framework that adapts to musicians' evolving workflows, generating high-quality music from the three aspects of lyrics, composition, and singing.

With just a few words, Doubao can generate lyrics with precise emotional expression. It also offers over 10 different music styles and emotional expressions, making the melody more diverse.

The model also supports multiple creation methods, including picture-to-music, inspiration-to-music, and lyrics-to-music, lowering the barrier to creation and making "playing" music accessible to everyone, not just a slogan.

- Doubao Simultaneous Interpretation Model

The Doubao Simultaneous Interpretation Model adopts end-to-end architecture to ensure high-precision, high-quality translation with lower latency. It also supports voice cloning, comparable to live interpreting, and often outperforms humans in many professional scenarios.

Simultaneous Interpretation Demo - "Former Ode on the Red Cliffs"

3. Multiple models upgraded, substantial improvements in overall performance, stronger in specific scenarios

In addition to the release of the brand new model, the Doubao LLM family has also been upgraded.

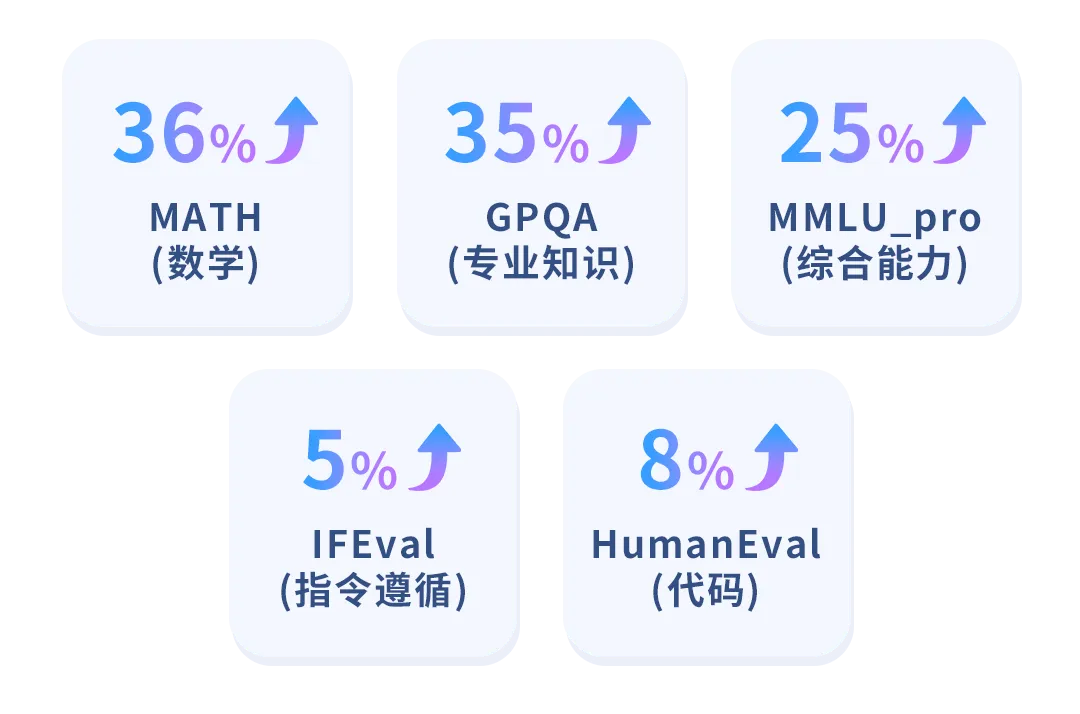

The flagship model, "Doubao General Model Pro," was upgraded at the end of August, with its overall capabilities on the MMLU-pro Datasets improving by 25% and leading peers domestically in all aspects.

In particular, the mathematical and professional knowledge capabilities have increased by over 35%, and capabilities such as instruction following and coding are also continuously improving. Doubao is now more adept at handling complex work and production scenarios.

It is worth mentioning that the context window of the Doubao general model pro has also been upgraded. The original pro 4k version will be upgraded directly to 32k, while the original 128k version will be upgraded to 256k. The new window size can handle about 400,000 Chinese characters, allowing you to read "The Three-Body Problem" in one go.

The speech synthesis model has also been upgraded, featuring enhanced mixing capabilities.

This capability is based on Seed-TTS, allowing for the mixing of voices with different roles and characteristics. The final output sounds very natural, with performance in coherence, sound quality, rhythm, and breathing comparable to real human voices. It can be applied in scenarios such as immersive audiobooks, companion AI interactions, and voice navigation.

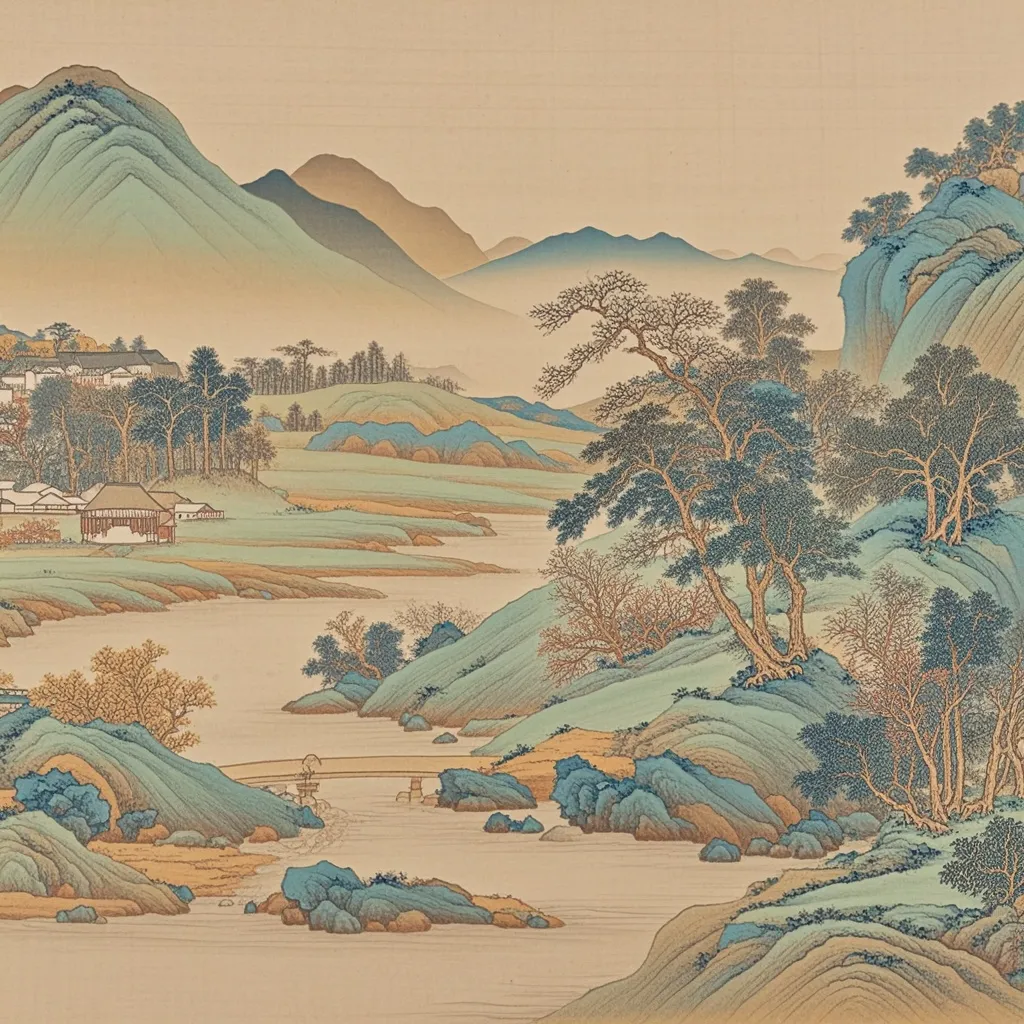

The text-to-image model has also been iterated.

This version of the model adopts a more efficient DiT architecture, significantly improving the model's inference efficiency and overall performance. It is particularly outstanding in complex text-image matching, understanding Chinese culture, and rapid image generation.

Specifically, the new model can accurately present the text-image matching relationships in various complex scenarios.

For real-world scenarios, it can achieve precise matching in six challenging areas of text-image generation: quantity, attributes, size, height, weight, and motion, making the generated content more consistent with the laws of the physical world.

Prompt: A bearded man holding a laptop, and a woman with red curly hair holding a tablet.

For imaginative scenarios, the model demonstrates stronger capabilities in concept combination, character creation, and virtual space shaping, excelling particularly in generating visual images for fantasy novels and creative designs.

Prompt: Cartoon-style illustration of a husky wearing a light blue baseball cap and sunglasses, holding a coffee in one hand and with the other hand in its pocket. It is sitting cross-legged in front of a coffee shop.

The model has also been upgraded in understanding and presenting ancient Chinese painting arts, better expressing different techniques such as Gongbi (meticulous) painting and Xieyi (freehand) painting.

Prompt: Draw a landscape painting in the style of "A thousand miles of rivers and mountains."

In terms of engineering, the team has optimized the entire engineering pipeline. With the same parameters, the inference cost is 67% of Flux, and the model can generate images in as fast as 3 seconds.

4. In Conclusion

As of September, the daily average token usage of the Doubao large model exceeded 1.3 trillion, with an overall growth of more than tenfold in four months.

Looking back at this release, from the launch of video generation models, music models, and simultaneous interpretation models, to the upgrades in text-to-image and speech synthesis, the Doubao LLM family has become more diverse, with continuous enhancements in model capabilities, laying a solid foundation for multimodal and diversified applications.

Behind this is the Doubao (Seed) team's full investment and effort in foundational model research and development, based on ByteDance's rich business scenarios.

The Doubao (Seed) team will continue to advance the continuous upgrade and iteration of model capabilities, bringing more surprises to the industry. Stay tuned.

To learn about team recruitment information, click to apply and join the Doubao (Seed) team. Work with outstanding people on challenging projects!